This is the story of how we launched our project ZeroStep, and how someone copied our repo and reached the top of the subreddits r/programming, r/frontend, and r/QualityAssurance two days later.

The launch

We launched ZeroStep on Thursday. It’s a library that adds AI-based test steps and assertions to the Playwright test framework. It consists of two components:

- An open-source JavaScript library that drives the browser using plain-text prompts. Prompts are executed via a single ai() function that automatically resolves to an action, an assertion, or a question.

- A proprietary backend that establishes a WebSocket connection with the JavaScript client. Text prompts are sent to the backend, along with a screenshot and DOM snapshot of the caller’s current browser state. Through an integration with OpenAI, along with some secret sauce, the backend determines the set of Playwright commands that fulfills the prompt, and emits them to the client via the WebSocket connection.
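To make the flow between the two components concrete, here is a minimal in-memory sketch. It is not ZeroStep's actual code or wire format: the PromptRequest, Command, PageDriver, Backend, and runPrompt names are hypothetical, the backend is a canned function rather than a WebSocket service backed by OpenAI, and everything runs synchronously for brevity.

```typescript
// Hypothetical message shapes for illustration; not ZeroStep's real wire format.
type PromptRequest = {
  prompt: string;       // plain-text instruction, e.g. "Click the sign-in button"
  screenshot: string;   // assumed here to be a base64-encoded image
  domSnapshot: string;  // assumed here to be serialized HTML
};

// A command the backend emits: either a browser action or a returned value.
type Command =
  | { kind: "click"; selector: string }
  | { kind: "fill"; selector: string; value: string }
  | { kind: "result"; value: string | boolean };

// Minimal stand-in for the browser page that actions are applied to.
interface PageDriver {
  click(selector: string): void;
  fill(selector: string, value: string): void;
}

// Stand-in for the backend: turns one request into an ordered command list.
type Backend = (request: PromptRequest) => Command[];

// Applies each command in order and returns the last "result" value, if any.
function runPrompt(
  request: PromptRequest,
  backend: Backend,
  page: PageDriver
): string | boolean | undefined {
  let result: string | boolean | undefined = undefined;
  for (const command of backend(request)) {
    switch (command.kind) {
      case "click":
        page.click(command.selector);
        break;
      case "fill":
        page.fill(command.selector, command.value);
        break;
      case "result":
        result = command.value;
        break;
    }
  }
  return result;
}

// A driver that only records what it was asked to do.
const actionLog: string[] = [];
const recordingPage: PageDriver = {
  click: (selector) => { actionLog.push(`click ${selector}`); },
  fill: (selector, value) => { actionLog.push(`fill ${selector}=${value}`); },
};

// A canned backend: prompts starting with "Click" become an action,
// anything else is answered with a boolean result.
const cannedBackend: Backend = (request) =>
  request.prompt.indexOf("Click") === 0
    ? [{ kind: "click", selector: "#sign-in" }]
    : [{ kind: "result", value: true }];
```

In this sketch, an action prompt produces side effects on the page and no return value, while a question produces a value and no side effects. That mirrors the idea of one entry point resolving to an action, an assertion, or a question, although the real decision is made by the backend rather than by a string check.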

Here’s an example test that uses our library to validate a scenario on GitHub:


The AI used in ZeroStep is the same AI we shipped six months ago in our low-code test platform Reflect, and we’ve been improving it ever since. We decided to spin out this AI technology into a separate library because we’ve proven it works (two-thirds of Reflect customers now use it), and because we want to try to reach developers who want to use AI in their tests, but prefer to use Playwright over a low-code tool.

Our launch on Thursday didn’t get the massive reaction we were hoping for, but sentiment overall was positive. We received 12 upvotes on our “Show HN” Hacker News post, which yielded some decent traffic and sign-ups. Our posts on a few subreddits received a few upvotes, but a surprising amount of traffic came from our /r/programming submission even though it had 0 total upvotes:

Overall we were happy to get some usage, and logged off on Friday to recharge.

Cloned over a weekend

On Saturday evening I was browsing Reddit when I saw a post on /r/QualityAssurance by the Reddit user lucgagan about a project very similar to ours:

That in itself isn’t too surprising: there are lots of startups and open-source projects trying to apply AI to various use cases. But on closer inspection, it became clear that this was not just another project with the same idea:

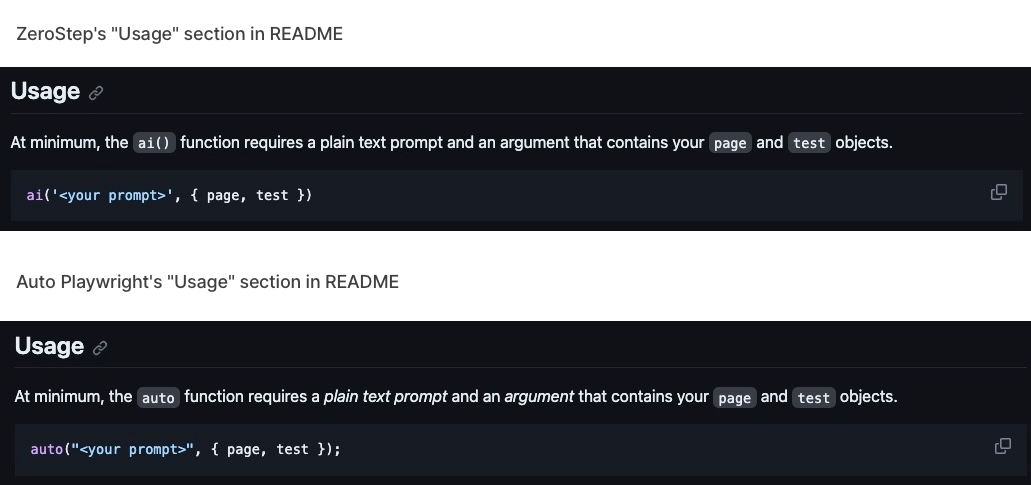

1. lucgagan’s JavaScript library exposes an auto() function that supports actions, assertions, and queries, just like ZeroStep:

2. Portions of our README were copy/pasted, with the only change being renaming ai() to auto():

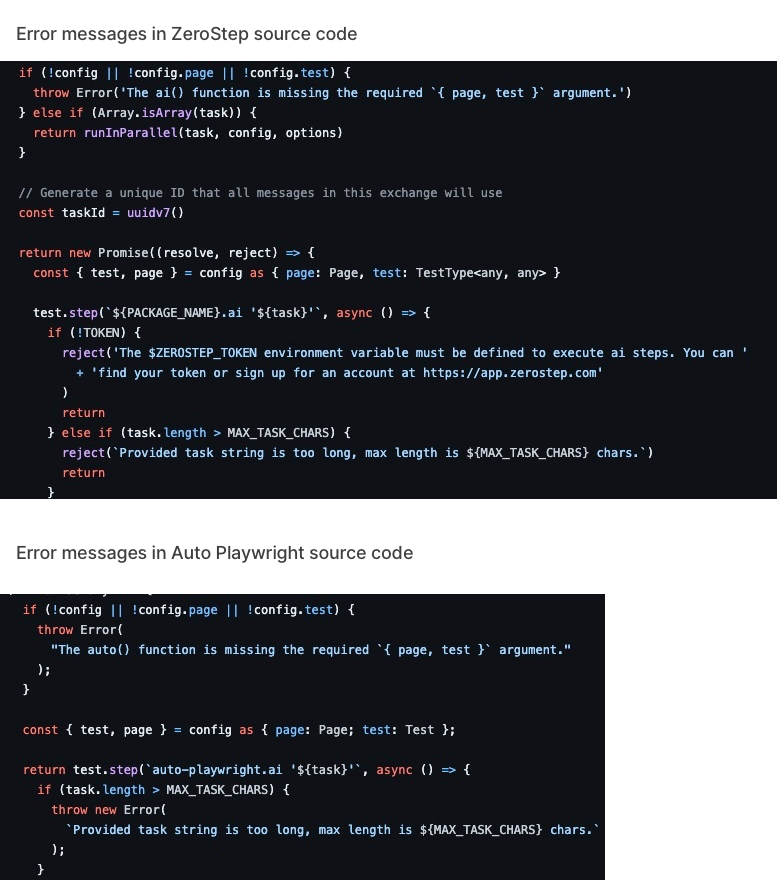

3. In the codebase itself, we found many examples of code taken straight from our repo:

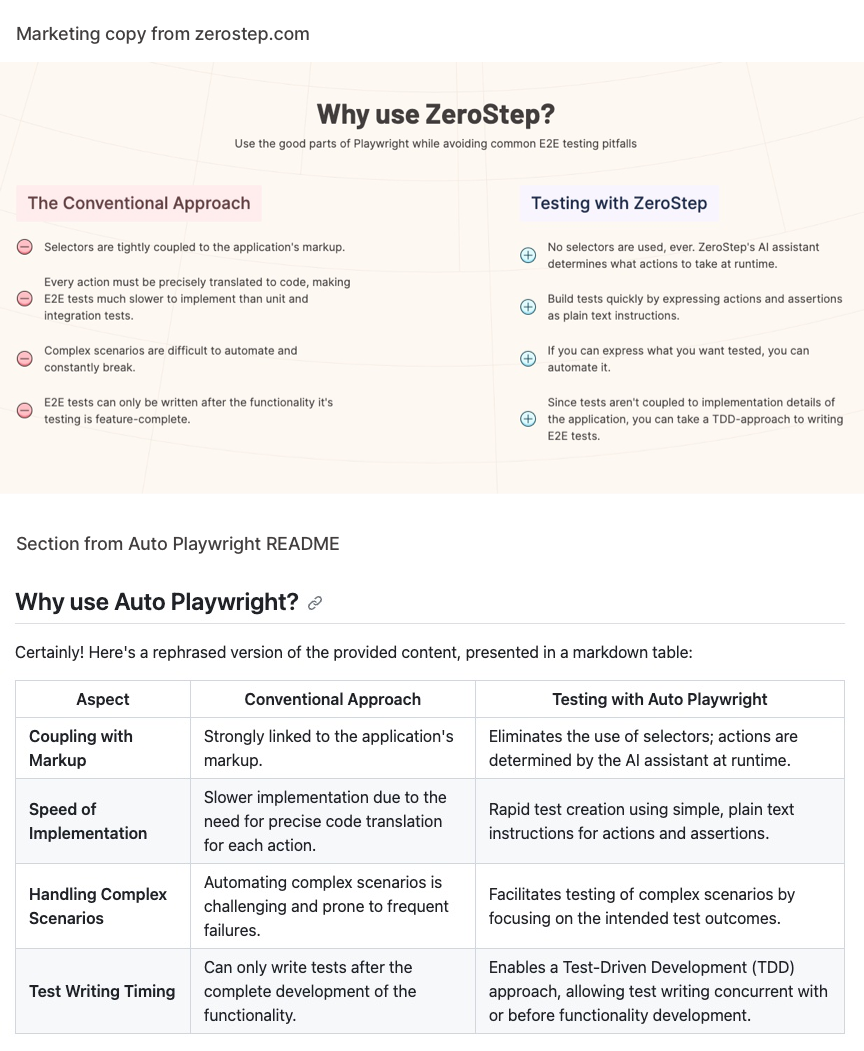

4. Marketing copy from our website showed up in lucgagan’s project, with only some cursory rewrites:

Based on this evidence, it was clear that this person had cloned our project without any sort of attribution, and further, had copied our marketing messaging as well.

To add insult to injury, lucgagan’s posts about this clone became the most upvoted posts on /r/programming, /r/frontend, and /r/QualityAssurance on Saturday evening…

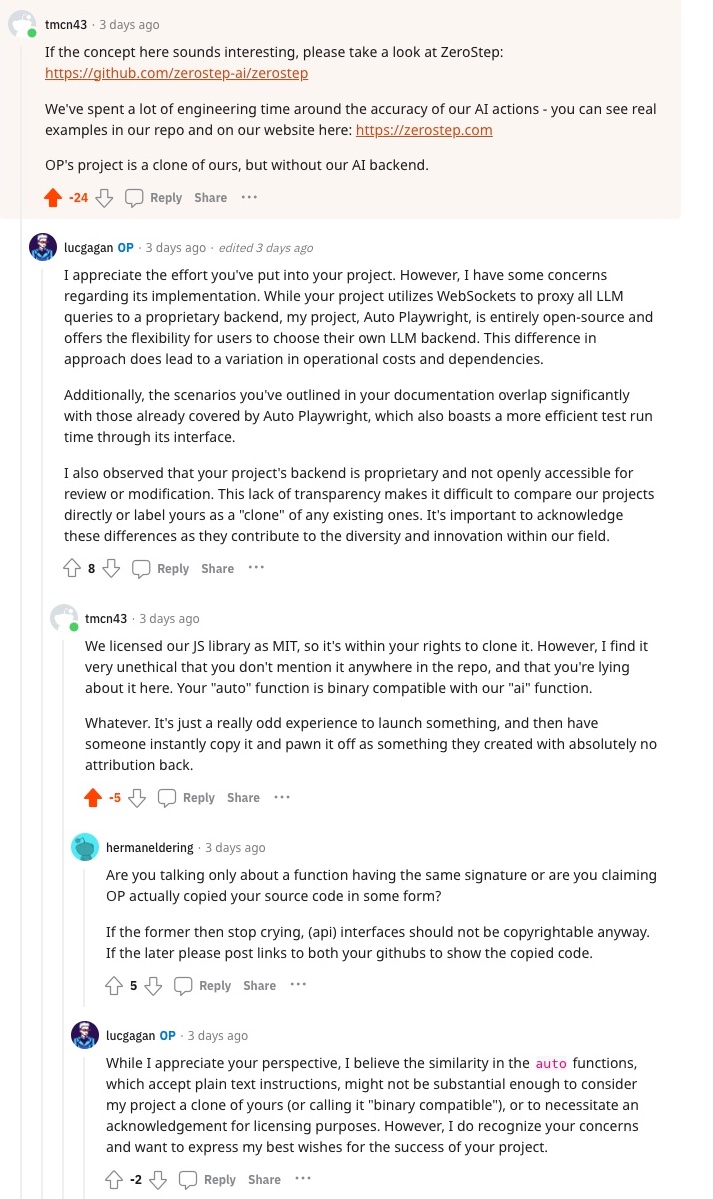

I added a comment to the /r/programming post to make it known this was a clone of our project, but it ended up getting heavily downvoted, with a comment from the project creator lucgagan effectively playing dumb about cloning our project:

Ethics in Open Source

Given that our JavaScript library is open-source under the MIT license, it’s perfectly fine for users to fork our project and reimplement it to use OpenAI instead of our proprietary backend. However, I firmly believe that the intent here was not to provide an option that uses a different LLM framework. I believe lucgagan instead intended to co-opt our project and market it as their own creation.

Because the clone replaces our backend with simple OpenAI prompts, the auto() function will return incorrect results for all but the most trivial of cases. I think it’s clear lucgagan knows this, since the only thing beyond calls to our backend that they didn’t include in the clone is our end-to-end tests, which demonstrate ZeroStep functioning correctly in non-trivial scenarios against sites like GitHub, The New York Times, and Google. We believe the accuracy of the AI is an essential feature of this project, and it’s unfortunate that the users of auto playwright didn’t get to experience that.

Moving forward

Unfortunately, dealing with bad actors is a cost of doing business when running a startup. Solo entrepreneurs spending their limited resources on dealing with credit card fraud, and VCs masquerading as sales prospects in order to do market research for a competing project, are just two of the recent examples of bad behavior that I’ve come across.

In the end, there were some positives to what happened:

- In some sense, the popularity of the clone project validates what we’re building.

- We can take some of the feedback on the clone’s Reddit posts and apply that to our project.

- We can learn from lucgagan’s success in getting their posts upvoted. In particular, I thought it was clever how they linked to a blog post explaining the project, rather than linking directly to a standalone marketing site. It made it seem more informational and less salesy.

There are many ups and downs in a startup. Ultimately this is just one blip in our journey. We’re looking forward to continuing to iterate on ZeroStep. If you’re interested in using AI in your existing Playwright tests, please consider giving it a try!